1/12 OpenAI Releases: o1 upgrades and a new ChatGPT tier?!

Should you use o1 and the new tier? (and our Bingo update!)

Good morning!

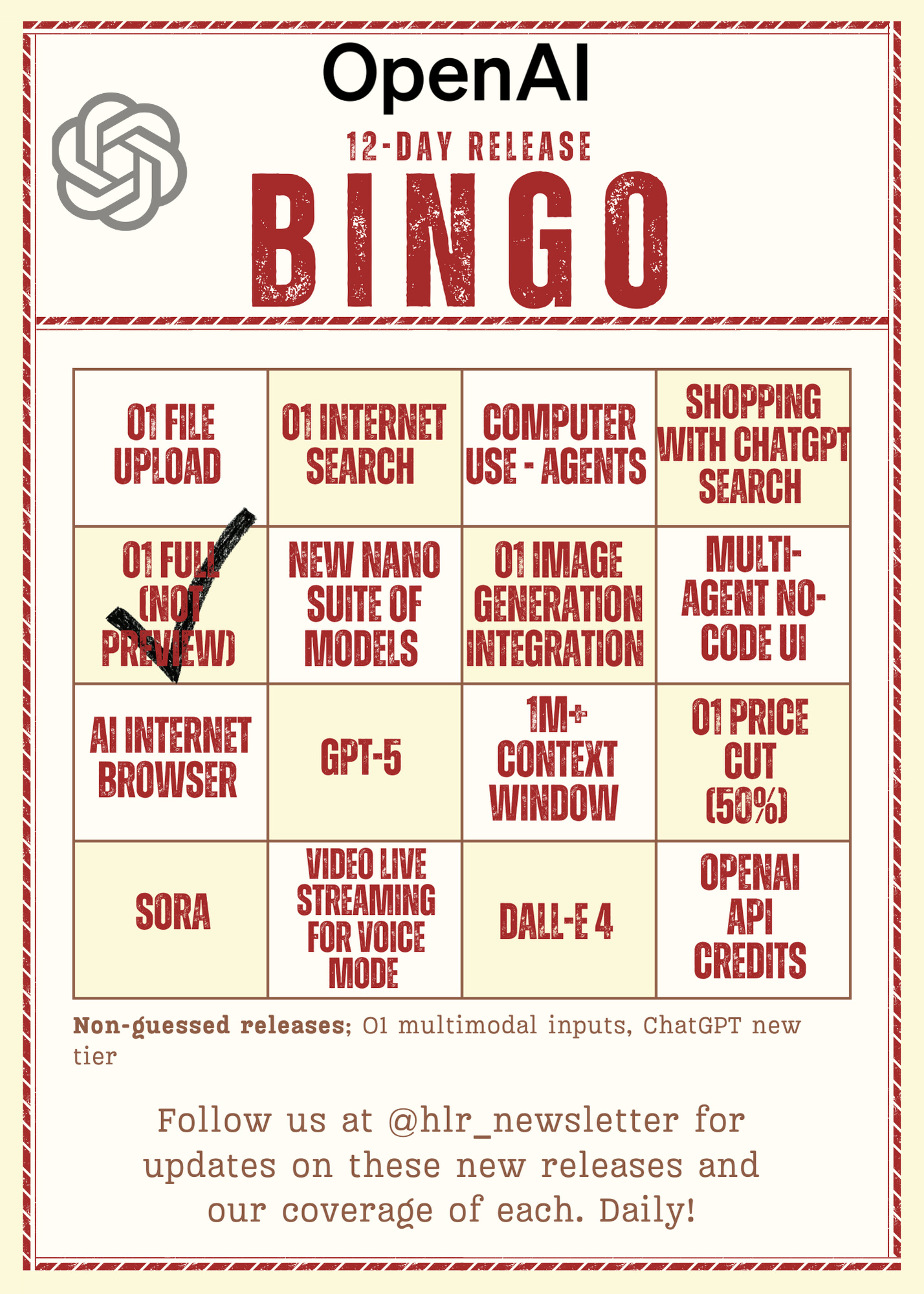

Today, we have our first checks on the bingo card thanks to one of the two releases:

o1 multimodal input

o1 full release

ChatGPT new tier (ChatGPT Pro)

Here’s the updated bingo card:

Unfortunately, we had guessed the new ChatGPT tier and multimodal inputs but removed them from our bingo card to fit the 4x4 grid. We were too general (or ambitious) with our file upload guess and got only image upload instead.

Quick reminder: if we get a BINGO!, we will give away five one-year subscriptions to the first five replies we get to this email. Otherwise, you can subscribe here with a nice discount:

Here are today’s OpenAI announcements and our thoughts:

o1-Full

Finally, the full o1 model is out, with improved performance across the board.

A less talked-about but equally interesting release is the o1 model card.

Here are some relevant points shared in the model card so that you don’t have to read the 40+ pages:

As you know, o1 “thinks” before giving a response by generating reasoning tokens (a chain of thought) ahead of its final answer.

o1 models include a full version (to replace the preview version) and a faster o1-mini optimized for coding.

Trained with reinforcement learning on diverse datasets, including proprietary and in-house sources.

Rigorous data filtering ensures quality and compliance with safety standards. Data is key!!

Improved reasoning performance on complex tasks compared to GPT-4o. (This is probably mostly thanks to the added context and the larger number of generated tokens…)

Enhanced multilingual competence across diverse languages. It is also able to think in different languages, as multilingual people often do.

Superior accuracy in refusing unsafe or disallowed content.

Reduced over-refusals on benign prompts. Less overly cautious!

Stronger resistance to jailbreak prompts and adversarial attacks.

Improved demographic fairness and reduced stereotypical responses. Probably thanks mostly to data improvements!

Chain-of-thought reasoning introduces risks like hallucinations and omissions. (“Reasoning” does not fix these! RAG is still relevant, as we expected.)

External red teaming validated enhanced safety compliance.

o1 Multimodality Input

OpenAI mentioned that o1 can now accept multiple input modalities, including images and sound. While this is useful, and we were expecting it, we had hoped file upload would be released at the same time.

ChatGPT New Tier Subscription (ChatGPT Pro)

OpenAI released a new Pro tier for $200 per month. It increases performance on the o1 model by giving Pro users a bigger compute (GPU) budget.

This new o1 pro mode is more ‘reliable,’ as shown in the results above. Under this stricter evaluation, a question only counts as correct if the model answers it correctly four times out of four.
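To make the “four out of four” idea concrete, here is a minimal sketch of how such a strict score differs from a plain first-attempt accuracy. The function names and the `attempts` data are made up for illustration; this is not OpenAI's evaluation code, only our reading of the metric.

```python
from typing import Dict, List


def first_attempt_accuracy(attempts: Dict[str, List[bool]]) -> float:
    """Share of questions answered correctly on the first try."""
    return sum(runs[0] for runs in attempts.values()) / len(attempts)


def strict_reliability(attempts: Dict[str, List[bool]], n: int = 4) -> float:
    """Share of questions answered correctly on all n attempts."""
    solved = 0
    for question, runs in attempts.items():
        if len(runs) != n:
            raise ValueError(f"{question}: expected {n} attempts, got {len(runs)}")
        solved += all(runs)  # True only if every attempt was correct
    return solved / len(attempts)


# Hypothetical results: one list of four attempts per question.
attempts = {
    "q1": [True, True, True, True],
    "q2": [True, False, True, True],   # one miss, so it fails the strict test
    "q3": [False, False, False, False],
    "q4": [True, True, True, True],
}

print(first_attempt_accuracy(attempts))  # 0.75
print(strict_reliability(attempts))      # 0.5
```

The same model looks noticeably worse under the strict score, which is why a gain on this metric says more about consistency than about peak ability.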

We assume this means a suite of dedicated GPUs for these Pro members and suspect most people won’t need it.

And to answer the question before it comes: yes, you can use the full o1 even with the regular Plus tier.

However, a question remains: Is this new tier worth it? Who is it for?

To justify the cost of $200 per month, we have to do a bit of math. The difference between the normal tier and the new tier is $200 - $20 = $180. After some testing with the API (paying per token), we found that solving a medium LeetCode problem costs around $0.41 (about 660 input tokens and 6,600 output tokens). So, to get a return on this extra $180 per month, you would have to solve about 439 LeetCode problems per month ($180 / $0.41) to beat the API (or prompt the model 439 times, assuming short problems with a small context). This number is hard to reach for a typical user but can be achieved with heavy prompting. So, in terms of pure compute cost, the new tier is definitely not for everyone.

Still, you can justify this price with the o1 rate limit, which is 50 prompts per week on the standard subscription tier. We don't know yet whether the new tier will have a maximum number of prompts (most likely not). So if you use o1 more than 50 times per week, it becomes a more relevant option.

Another key aspect to consider before choosing the monthly offer over the API is that API prices have dropped over the past few years and will most likely continue to go down.
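If you want to redo this break-even math with your own usage, here is a small sketch. The per-token prices are an assumption on our side (15 USD per million input tokens and 60 USD per million output tokens, which reproduces the 0.41 USD figure); check the current price list before relying on them. The function names are ours.

```python
import math

# Assumed o1 API prices in USD per 1M tokens (not an official constant).
INPUT_PRICE_PER_M = 15.00
OUTPUT_PRICE_PER_M = 60.00


def cost_per_call(input_tokens: int, output_tokens: int) -> float:
    """API cost in USD of one call with the given token counts."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


def break_even_calls(extra_monthly_cost: float, per_call_cost: float) -> int:
    """Number of API calls the extra subscription cost would pay for."""
    return math.floor(extra_monthly_cost / per_call_cost)


# One medium LeetCode problem: ~660 input tokens, ~6,600 output tokens.
per_call = round(cost_per_call(660, 6_600), 2)
extra = 200 - 20  # new tier minus the regular tier, in USD per month

print(per_call)                           # 0.41
print(break_even_calls(extra, per_call))  # 439
```

Longer prompts or longer reasoning traces raise the cost per call and lower the break-even count, so plug in token counts from your own workload.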

Reminder - Advice on prompting o1 models

As OpenAI writes in their documentation, these models perform best with simple prompts. “Advanced” techniques, like few-shot prompting or instructing the model to "think step by step," may not enhance performance and can sometimes hinder it.

Here are some of OpenAI’s recommended practices:

Keep prompts simple and direct: The models better understand and respond to brief, straightforward instructions without extensive guidance.

Avoid chain-of-thought prompts: Since these models perform reasoning internally, prompting them to "think step by step" or "explain your reasoning" is unnecessary.

Use delimiters for clarity: Use delimiters like triple quotation marks, XML tags, or section titles to clearly indicate distinct parts of the input, helping the model interpret different sections appropriately. Make sure to read the complete prompt!

As a good rule of thumb, if a reasonably skilled professional reads the whole input and can perform the task well, then LLMs can also complete the task.

Limit additional context in retrieval-augmented generation (RAG): When providing additional context or documents, include only the most relevant information to prevent the model from overcomplicating its response. Check out our iteration on The Rise of RAG.
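Here is a minimal sketch of what these recommendations can look like in practice: a short, direct task, XML-style delimiters, and only the most relevant context. The `build_prompt` helper, the tag names, and the relevance scores are all hypothetical; the scores would come from whatever retriever you already use.

```python
from typing import List, Tuple


def build_prompt(task: str, scored_chunks: List[Tuple[float, str]], top_k: int = 2) -> str:
    """Build a simple delimited prompt that keeps only the top_k most relevant chunks."""
    # Sort by relevance score (highest first) and drop everything past top_k.
    best = sorted(scored_chunks, key=lambda pair: pair[0], reverse=True)[:top_k]
    context = "\n".join(f"<document>{text}</document>" for _, text in best)
    # No "think step by step" instruction: the model reasons on its own.
    return f"<task>\n{task}\n</task>\n<context>\n{context}\n</context>"


# Hypothetical retrieved chunks as (relevance score, text) pairs.
chunks = [
    (0.91, "o1 is limited to 50 prompts per week on Plus."),
    (0.15, "Our bingo card is a 4x4 grid."),
    (0.78, "ChatGPT Pro costs 200 USD per month."),
]

prompt = build_prompt("Summarize the o1 usage limits and pricing.", chunks, top_k=2)
print(prompt)
```

The low-scoring bingo chunk never reaches the model, and the tags make it clear which part is the instruction and which part is reference material.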

And that’s it for today’s releases. See you tomorrow with the new releases!