2/12 OpenAI Releases: We can now fine-tune o1!

Reinforcement Learning comes to OpenAI o1 models!

Good morning!

Unfortunately, there was no bingo progress today! Instead, we have something quite interesting for o1: reinforcement fine-tuning (RLFT), or preference tuning, however you prefer to call it.

Reinforcement fine-tuning differs from supervised fine-tuning (SFT), which is already available on the OpenAI platform, because it allows you to train with a non-fixed objective. SFT trains the model on next-token prediction against a ground truth. In contrast, RLFT lets us train the model on query/answer pairs. We can now set a grader, an evaluation function, in the training process. This grader evaluates how good a prediction is as a whole, rather than scoring each token.
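To make the contrast concrete, here is a minimal sketch of the two kinds of signal. It is only an illustration, not OpenAI's training code: `sft_token_loss` and `answer_reward` are hypothetical names, and the exact-match grader is the simplest stand-in we could pick.

```python
import math

def sft_token_loss(token_probs):
    """SFT-style signal: mean negative log-likelihood over ground-truth tokens.

    token_probs[i] is the probability the model gave to the i-th
    ground-truth token, so every position needs its own target.
    """
    return -sum(math.log(p) for p in token_probs) / len(token_probs)

def answer_reward(predicted_answer, reference_answer):
    """RLFT-style signal (hypothetical grader): one score for the whole answer."""
    same = predicted_answer.strip().lower() == reference_answer.strip().lower()
    return 1.0 if same else 0.0

# Per-token signal: needs a probability for every ground-truth token.
loss = sft_token_loss([0.9, 0.8, 0.5])

# Per-answer signal: only the final answer is compared to the reference.
reward = answer_reward(" Paris", "paris")
```

The first function needs a target for every token, while the second only ever looks at the final answer.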

So, why would we want to evaluate an answer rather than each token of the answer? First, we cannot do supervised fine-tuning with o1. With SFT, you’d need to give the model the whole input-output sequence and have it predict each next token from the tokens before it. This is impossible in o1’s case because we cannot access the tokens generated in its thinking process (before the “end_of_thought” token is generated). This means we can’t give a per-token reward and instead have to go for a per-answer reward.

“We encourage research institutes, universities, and enterprises to apply, particularly those that currently execute narrow sets of complex tasks […] We’ve seen promising results in domains like Law, Insurance, Healthcare, Finance, and Engineering because Reinforcement Fine-Tuning excels at tasks where the outcome has an objectively “correct” answer that most experts would agree with.”

RLFT also allows us to have question-answer pairs in our training dataset, which is highly beneficial in o1’s case. o1 usually produces a long chain of thought, which we’d have to provide in full in the SFT case to rate each token. Instead, we only rate the final answer and improve the model from there. We can also grade (rate) answers with more than just a correct/incorrect schema.

In OpenAI’s case, you tell the grader how to grade the answers and have it respond with a number between 0 and 1: 1 means the answer is perfect, and 0 means it is incorrect. Anything in between tells the model that the answer is partially right, which helps a lot in steering training in the right direction.
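As an illustration of partial credit, here is a small sketch of a grader that returns a score between 0 and 1. The setup is an assumption for the sake of the example: the model outputs a ranked list of candidate answers, and the hypothetical `grade_ranked_answer` function gives full credit when the correct one is first and less credit the further down it appears. OpenAI's actual grader configuration may look quite different.

```python
def grade_ranked_answer(ranked_candidates, correct):
    """Hypothetical grader returning a score in [0, 1].

    1.0 if the correct answer is ranked first, 1/rank if it appears
    lower in the list, and 0.0 if it is missing entirely.
    """
    for rank, candidate in enumerate(ranked_candidates, start=1):
        if candidate == correct:
            return 1.0 / rank
    return 0.0

perfect = grade_ranked_answer(["A", "B", "C"], "A")   # correct answer first
partial = grade_ranked_answer(["B", "A", "C"], "A")   # correct answer second
wrong = grade_ranked_answer(["B", "C", "D"], "A")     # correct answer absent
```

A reply that ranks the right answer second still earns some reward, which gives the model a signal that it was close rather than simply wrong.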

You do that by providing dozens to thousands of query-reply examples, which the grader uses to score the model’s replies. We’ll talk more about reinforcement learning (RL) and when you should use it in a complete iteration later on!
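Here is a rough sketch of what such a dataset and grading pass could look like. The field names (`query`, `reference_answer`), the JSONL layout, and the `toy_model` stand-in are all assumptions for illustration, not OpenAI's actual file format or API.

```python
import json

# Hypothetical training examples: one query and one reference answer each.
examples = [
    {"query": "What is 2 + 2?", "reference_answer": "4"},
    {"query": "Capital of France?", "reference_answer": "Paris"},
]

def to_jsonl(rows):
    """Serialize examples as one JSON object per line."""
    return "\n".join(json.dumps(row) for row in rows)

def from_jsonl(text):
    """Parse JSONL text back into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]

def exact_match(prediction, reference):
    """Simplest possible grader: 1.0 for a match, 0.0 otherwise."""
    return 1.0 if prediction == reference else 0.0

def toy_model(query):
    """Placeholder for the model being tuned; always answers "4"."""
    return "4"

def mean_score(rows, model, grader):
    """Average grader score of the model's replies over the dataset."""
    scores = [grader(model(row["query"]), row["reference_answer"]) for row in rows]
    return sum(scores) / len(scores)

rows = from_jsonl(to_jsonl(examples))
score = mean_score(rows, toy_model, exact_match)
```

The average score is the kind of number you would watch go up as tuning progresses; the per-example scores are what would actually serve as the reward.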

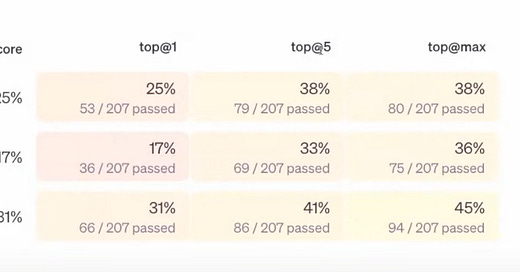

They also showed how it could help o1-mini get better results than o1, with a gene example going from 25% accuracy to 31% accuracy:

We think that SFT will remain useful for fine-tuning a model to a new specific task. RL fine-tuning becomes an interesting additional tool to further push the model towards a desired kind of answer, while also allowing it more flexibility in the way it “thinks” of the answer (vs. a per-token reward). Finally, it is also easier to do, since you rate answers rather than having to provide perfect ground truths.

And that’s it for day 2! See you on day 3!

p.s. RLFT is not available yet, but if you have a use case in mind, apply to get access here!

Oh, and here’s the updated bingo card: