Good morning, everyone!

In recent years, we’ve all seen an impressive increase in the capabilities of large language models (LLMs). But as advanced as models like GPT-4o and Claude 3.5 Sonnet are, they’re just the starting point for a new generation of AI applications that go beyond answering questions and holding conversations: “agentic AI” and “multi-agent” systems.

What is Agentic AI?

At its core, agentic AI is a system that can act autonomously. You can think of such systems as chatbots that can not only respond to questions but also take actions on your behalf. Examples include asking ChatGPT to search online for the latest documentation before answering your question, or prompting Devin to create an app. Think of an agent (essentially ChatGPT plus tools) as an AI that can initiate tasks, make decisions, and even collaborate with other agents (or people) to complete complex tasks.

What Are “Multi-Agent Systems”?

Multi-agent systems are software architectures that orchestrate multiple LLM-powered agents to work together on more complex tasks. Agents can differ from one another in several ways:
- They can share the same base LLM but use different system prompts or “roles” (e.g., one gpt-4o instance as a researcher, another as an editor).
- They can have different contexts (the threads of exchange between agents).
- They can have different memories, which are similar to context but add logic to “remember” specific things.
- They can use different LLMs optimized for specific tasks (e.g., o1-preview for complex reasoning, smaller models like gemini-1.5-flash for simpler tasks).
- They can be connected to various tools and APIs.

The key advantage is breaking down complex workflows into discrete steps handled by specialized agents working sequentially or in parallel. Rather than relying on a single LLM, multi-agent systems coordinate multiple agents. This lets them execute tasks simultaneously, leverage specialized capabilities and tools, and handle complex workflows requiring multiple operations, all while balancing speed, cost, and quality.

For example, a content creation pipeline might chain several specialized agents: one researching with search tools, another drafting content, and a third editing the output. The multi-agent system handles the orchestration, allowing agents to work together seamlessly while optimizing for performance and cost. This modular design also means individual agents or tools can be swapped out as better options become available.
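To make the idea of roles concrete, here is a minimal sketch of the sequential researcher, writer, and editor pipeline described above. Each agent shares the same model interface but gets its own system prompt, and each could be given a different model. `Agent`, `run_pipeline`, and `fake_llm` are hypothetical names. `fake_llm` is an offline stub standing in for a real model call, so you would swap in your own client.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical model interface: (system_prompt, user_message) -> reply text.
LLM = Callable[[str, str], str]


def fake_llm(system_prompt: str, user_message: str) -> str:
    """Offline stand-in for a real model call: tags the input with the role."""
    role = system_prompt.split(".")[0]
    return f"[{role}] {user_message}"


@dataclass
class Agent:
    name: str
    system_prompt: str  # the "role" that specializes this agent
    llm: LLM            # each agent could use a different model here

    def run(self, task: str) -> str:
        return self.llm(self.system_prompt, task)


def run_pipeline(agents: list[Agent], task: str) -> str:
    """Run agents sequentially: each one receives the previous agent's output."""
    output = task
    for agent in agents:
        output = agent.run(output)
    return output


researcher = Agent("researcher", "You are a researcher. Collect key facts.", fake_llm)
writer = Agent("writer", "You are a writer. Draft an article from the notes.", fake_llm)
editor = Agent("editor", "You are an editor. Tighten and correct the draft.", fake_llm)

result = run_pipeline([researcher, writer, editor], "Explain multi-agent systems")
print(result)
# [You are an editor] [You are a writer] [You are a researcher] Explain multi-agent systems
```

Swapping an agent means replacing one `Agent` entry, which is the modularity benefit mentioned above.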

Agent Frameworks: ReACT, MetaGPT, LangChain, and OpenAI Swarm

Several agent frameworks have come out over the last two years. Here’s a look at four noteworthy ones that take different approaches to building multi-agent applications:

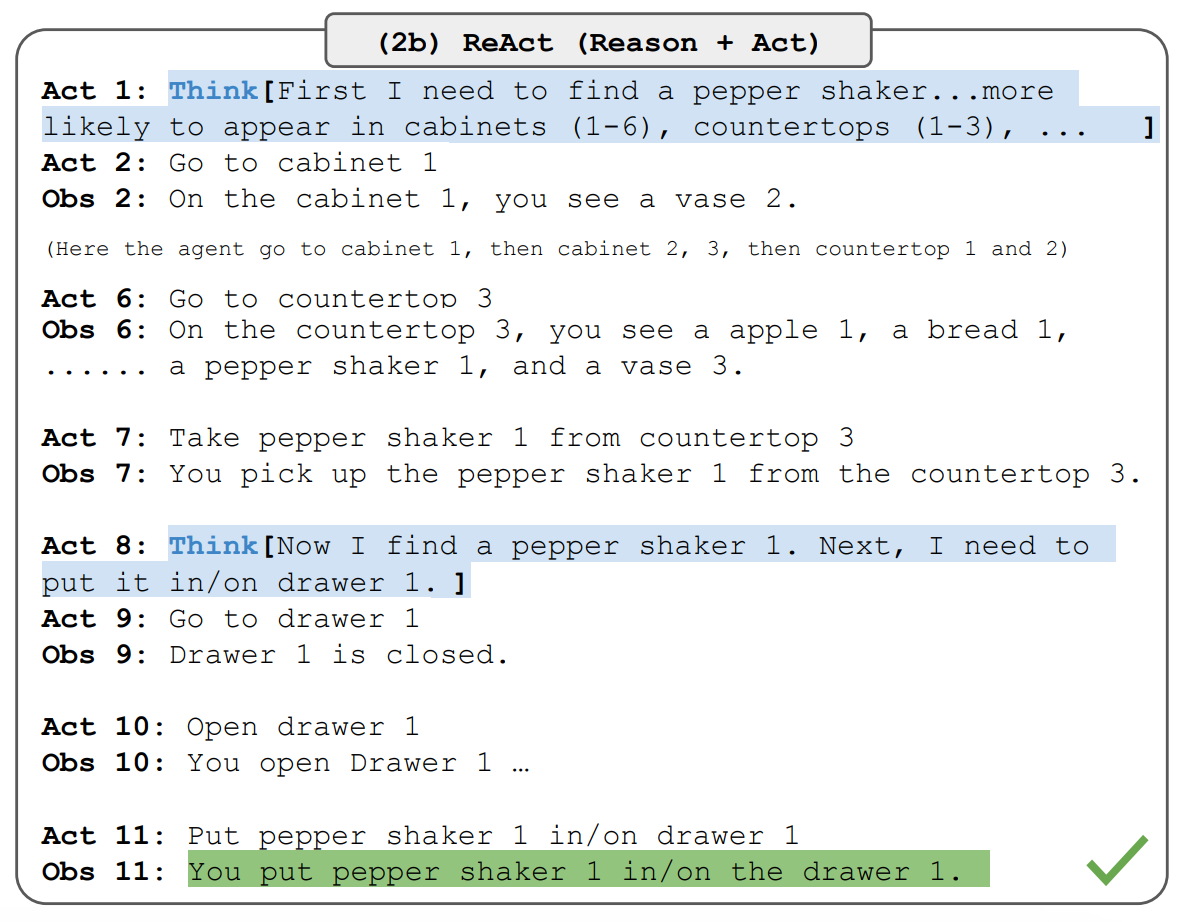

1. ReACT: Combining Reasoning and ACTing

Introduced in 2022, ReACT was an early approach to combining reasoning and action steps in AI. Rather than answering in one shot, a ReACT agent interleaves reasoning steps (“thoughts”) with actions such as tool calls. It then uses the result of each action (the “observation”) to decide its next step. This design enables ReACT agents to handle tasks that require both planning and action.

For example, consider an AI system tasked with planning a travel itinerary. Using ReACT, the agent would alternate between reasoning and acting. It might reason that it needs flight options first, search for flights, and read the results. Next, it would reason about which hotels fit those dates, compare their prices, and evaluate activity recommendations, using each observation to make well-informed choices. Finally, it would book the flights, reserve accommodations, and arrange activities. While powerful for its time, ReACT has since been surpassed by more adaptable frameworks that can dynamically coordinate agent roles with greater efficiency and lower error rates.
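The thought, action, and observation loop can be sketched in a few lines. This is a minimal illustration, not the original ReACT implementation. The hypothetical `scripted_policy` function replaces the LLM with hard-coded decisions, and the two travel tools are made-up stubs returning fixed strings.

```python
from typing import Callable

# Hypothetical tools; a real agent would call flight and hotel search APIs here.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": lambda city: f"cheapest flight to {city}: $420",
    "search_hotels": lambda city: f"best-rated hotel in {city}: $150/night",
}


def scripted_policy(history: list[str]) -> tuple[str, str, str]:
    """Stand-in for the LLM: returns (thought, action, action_input).

    A real ReACT agent would prompt the model with the history and parse
    its thought and action from the reply; here the steps are hard-coded.
    """
    observations = sum(1 for line in history if line.startswith("Observation:"))
    if observations == 0:
        return ("I need flight options first.", "search_flights", "Paris")
    if observations == 1:
        return ("Flights found; now compare hotels.", "search_hotels", "Paris")
    return ("I have enough information.", "finish", "Book the $420 flight and the $150 hotel.")


def react_agent(task: str, policy=scripted_policy, max_steps: int = 5) -> tuple[str, list[str]]:
    history = [f"Task: {task}"]
    for _ in range(max_steps):  # cap iterations to avoid endless loops
        thought, action, action_input = policy(history)
        history.append(f"Thought: {thought}")
        if action == "finish":
            history.append(f"Answer: {action_input}")
            return action_input, history
        history.append(f"Action: {action}[{action_input}]")
        observation = TOOLS[action](action_input)  # act, then observe the result
        history.append(f"Observation: {observation}")
    return "Stopped: step limit reached.", history


answer, trace = react_agent("Plan a weekend trip to Paris")
print("\n".join(trace))
```

The step limit is a cheap safeguard against the repetitive loops that autonomous agents are prone to.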

2. MetaGPT: Specialized Multi-Agent Collaboration

MetaGPT, introduced in 2023, was one of the first frameworks to structure agents in a collaborative, “team-based” format. Each agent in MetaGPT has a defined “expertise,” leveraging Standardized Operating Procedures (SOPs) to work together efficiently, like a team of specialists, to complete complex tasks. This made MetaGPT ideal for applications where tasks need to be divided into smaller, specialized roles.

For example, in a product launch scenario, MetaGPT can break down the work: one agent might focus on generating marketing content, while another drafts technical documentation, and yet another handles customer support FAQs.
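Here is a simplified sketch of the “team of specialists with SOPs” idea applied to the product launch example. Each hypothetical `Role` must produce a document that follows its own template, and any output that skips a required section is rejected before it moves on. The class and function names are illustrative and are not MetaGPT’s actual API. `act` is a placeholder for an LLM call.

```python
from dataclasses import dataclass


@dataclass
class Role:
    name: str
    sop_sections: list[str]  # the output template (SOP) this role must follow

    def act(self, brief: str) -> str:
        # Placeholder for an LLM call prompted with the role and its template.
        return "\n".join(f"## {s}\n{self.name} notes on {brief}" for s in self.sop_sections)


def follows_sop(role: Role, document: str) -> bool:
    """Check that every required section heading appears in the output."""
    return all(f"## {s}" in document for s in role.sop_sections)


team = [
    Role("marketer", ["Tagline", "Key benefits"]),
    Role("tech_writer", ["Installation", "API overview"]),
    Role("support", ["FAQ"]),
]

brief = "launch of the v2 product"
deliverables = {}
for role in team:
    doc = role.act(brief)
    if not follows_sop(role, doc):  # reject off-template output early
        raise ValueError(f"{role.name} output does not follow its SOP template")
    deliverables[role.name] = doc

print(deliverables["support"])
```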

After we ran several tests with the framework, its limitations became evident:
- **Cost.** The process is token-intensive, which makes it more expensive than managing the back-and-forth with an LLM manually.
- **Outdated packages.** LLMs have a training cutoff and, by default, no online search capabilities. As a result, the generated code often references outdated or incompatible packages, and initial tests frequently fail due to version compatibility issues.
- **Manual fixes.** MetaGPT allows for re-prompting, which means starting from an existing project and adding requirements or refining it. Even so, making specific changes still requires manually fixing bugs or making improvements. This means reading and reviewing all the generated code and documentation to understand why the agents made certain decisions, which takes significant effort.

You can find interesting use cases in the MetaGPT documentation. It performs well in certain scenarios, such as imitating a web page, web scraping, and data analysis. However, don’t expect perfect results on the first try. It’s a process of trial and error, and you may discover that your task is simply not achievable with a multi-agent framework.

Newer agent frameworks have since refined these approaches. They offer better coordination and optimized processes, completing tasks faster and with less back-and-forth. As the technology evolved, this made MetaGPT and ReACT less popular.

3. LangChain: Connecting LLMs with Tools

LangChain is a popular framework designed to facilitate the creation of systems that let LLMs interact with external tools, APIs, and data sources. Unlike MetaGPT or ReACT, which focus on agent collaboration and reasoning, LangChain emphasizes integration, allowing LLMs to perform complex operations by connecting to external resources.

With tools, these models can handle tasks that require access to real-time information, databases, or APIs. For instance, a LangChain-based agent might pull data from a financial API, analyze the information, and generate a market analysis report. This makes LangChain a strong choice for applications that need to interact dynamically with external data sources, giving LLMs a broader and more practical range of functions.
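Under the hood, tool use usually comes down to the model emitting a structured call: a tool name plus JSON arguments. Your code then executes that call and feeds the result back to the model. The sketch below shows the dispatch step in plain Python. It does not use LangChain’s API, and `get_stock_price` is a hypothetical tool with fake prices. The exact tool-call format also varies between model providers.

```python
import json
from typing import Callable


def get_stock_price(ticker: str) -> dict:
    """Hypothetical tool: a real app would query a financial data API here."""
    fake_prices = {"AAPL": 190.0, "MSFT": 410.0}
    return {"ticker": ticker, "price": fake_prices.get(ticker)}


# Registry mapping tool names (as described to the model) to Python callables.
TOOLS: dict[str, Callable[..., dict]] = {"get_stock_price": get_stock_price}


def dispatch(tool_call_json: str) -> str:
    """Execute a tool call emitted by the model and return the result as JSON text."""
    call = json.loads(tool_call_json)
    func = TOOLS.get(call["name"])
    if func is None:
        return json.dumps({"error": f"unknown tool {call['name']}"})
    result = func(**call["arguments"])
    return json.dumps(result)  # this string is sent back to the model as context


# Illustrative tool call; real providers wrap this in their own message format.
model_output = '{"name": "get_stock_price", "arguments": {"ticker": "AAPL"}}'
print(dispatch(model_output))  # {"ticker": "AAPL", "price": 190.0}
```

Returning an error message instead of raising an exception lets the model recover from a bad tool name on its next turn.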

4. OpenAI Swarm: Lightweight Agent Orchestration

OpenAI’s recent experimental Swarm framework introduces a streamlined way to manage multiple agents. Swarm is designed to be lightweight, focusing on easy orchestration and scalability of agents. Agents in Swarm can hand off tasks to each other as needed, creating a workflow that allows for dynamic conversation flows and complex problem-solving. Swarm operates using OpenAI’s Chat Completions API (or others) and runs statelessly between calls, emphasizing flexibility and control. While still experimental, Swarm is being explored for education and research purposes and is especially suited for simpler multi-agent tasks that still benefit from flexible agent management and coordination.
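In Swarm itself, agents are `Agent` objects with `instructions` and `functions`, and a handoff is simply a function that returns another agent. The toy below reimplements that idea in plain Python so it runs offline. It is not Swarm’s code: `pick_function` is a hypothetical keyword-matching stub that stands in for the model’s tool choice. As in Swarm, no state survives between calls except what `run` returns.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Agent:
    name: str
    instructions: str
    # Functions the agent may call; a function that returns an Agent is a handoff.
    functions: list[Callable] = field(default_factory=list)


def pick_function(agent: Agent, message: str) -> Optional[Callable]:
    """Stand-in for the model's tool choice: match function names to keywords."""
    for func in agent.functions:
        if func.__name__.split("_")[-1] in message.lower():
            return func
    return None


def run(agent: Agent, messages: list[dict], max_turns: int = 5) -> tuple[Agent, list[dict]]:
    """Stateless loop: everything the next call needs is in the returned values."""
    messages = list(messages)  # copy so the caller's history is never mutated
    user_text = next(m["content"] for m in reversed(messages) if m["role"] == "user")
    for _ in range(max_turns):
        func = pick_function(agent, user_text)
        if func is None:  # no function applies: the active agent replies itself
            messages.append({"role": "assistant", "content": f"{agent.name} answers directly."})
            break
        result = func()
        if isinstance(result, Agent):  # handoff: the returned agent takes over
            agent = result
            messages.append({"role": "system", "content": f"Transferred to {agent.name}."})
        else:  # ordinary function: record its result and stop
            messages.append({"role": "tool", "content": str(result)})
            break
    return agent, messages


billing = Agent("billing", "Handle invoices and refunds.")


def transfer_to_billing() -> Agent:
    return billing


triage = Agent("triage", "Route the user to the right agent.", [transfer_to_billing])

final_agent, history = run(triage, [{"role": "user", "content": "I have a billing question"}])
print(final_agent.name)  # billing
```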

💡 We suggest trying these tools for repetitive tasks with a lot of overlap, such as designing frontend pages for multiple brands that are nearly identical except for small parts. They are usually not worth the time investment for a one-off project.

Swarm can be seen as a more general version of the MetaGPT approach, except that here you define yourself how the agents exchange messages and in what order they run in the pipeline. It is more flexible and better suited to broader tasks (not only programming ones). However, it also takes more trial and error and usually longer to find a good solution. In this case, with great power comes greater cost.

A Note on AutoGPT and BabyAGI

When AutoGPT and BabyAGI came out in 2023, they generated a lot of hype. Both attempted to create fully autonomous AI agent systems that could independently set goals, execute plans, and iteratively refine their approaches. The initial excitement came from the promise of agents capable of “self-directed” intelligence, and they were among the first projects to leverage recent LLMs collaboratively. However, these systems often ran into issues like repetitive task loops and difficulty managing memory and context across complex tasks. As a result, they’ve largely fallen out of favor. Developers have turned toward more controlled, task-specific tools (e.g., Cursor for coding, ChatGPT or Claude for writing and documenting), which offer greater reliability and fewer errors in real-world applications.

Serial vs. Parallel LLMs: A Differentiating Factor

With frameworks like LLMCompiler, we’re seeing a significant development in how we structure LLM-powered workflows: serial vs. parallel processing. In a serial setup, as in ReACT, each task step is handled one after the other, making it ideal for workflows where each stage depends on the outcome of the previous one. Serial workflows are generally simpler and effective for straightforward, linear processes. However, they can be slower, especially if tasks are lengthy or require extensive computation.

Parallel processing, on the other hand, allows multiple tasks to run simultaneously, distributing the workload across multiple LLM calls or models. This approach can lead to significant time savings, since different parts of a task are completed independently. For example, in a multi-agent setup for customer support, one agent can handle data retrieval while another processes language translations, with both running in parallel for faster outcomes. LLMCompiler and similar tools increasingly leverage this capability, making it easier to build robust, efficient, and faster multi-agent workflows. Of course, this only works for tasks that do not depend on each other, since a step cannot run in parallel with a step whose output it needs. In practice, combining both approaches works best: run independent steps in parallel and chain dependent ones serially.
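Tools like LLMCompiler plan a dependency graph of calls and execute independent ones in parallel. The scheduler below is our own simplified sketch of that idea using `asyncio`, not LLMCompiler’s code. Every task starts immediately but waits only for its own prerequisites, so independent tasks overlap while dependent ones run in order. `fake_llm_task` is a hypothetical stand-in that just sleeps, and the customer-support graph is made up for illustration.

```python
import asyncio
import time


async def fake_llm_task(name: str, inputs: list[str], delay: float = 0.2) -> str:
    """Stand-in for an LLM or tool call that takes `delay` seconds to finish."""
    await asyncio.sleep(delay)
    return f"{name}({', '.join(inputs)})" if inputs else name


async def run_dag(deps: dict[str, list[str]]) -> dict[str, str]:
    """Start every task at once; each one waits only for its own prerequisites.

    Independent tasks therefore run in parallel, while dependent tasks run
    serially after the tasks they need. Assumes the graph has no cycles.
    """
    tasks: dict[str, asyncio.Task] = {}

    async def run_node(name: str) -> str:
        inputs = [await tasks[dep] for dep in deps[name]]
        return await fake_llm_task(name, inputs)

    for name in deps:
        tasks[name] = asyncio.create_task(run_node(name))
    return {name: await task for name, task in tasks.items()}


# Hypothetical customer-support plan: retrieval and translation are independent,
# and the final answer needs both.
deps = {
    "retrieve_data": [],
    "translate": [],
    "answer": ["retrieve_data", "translate"],
}

start = time.perf_counter()
results = asyncio.run(run_dag(deps))
elapsed = time.perf_counter() - start
print(results["answer"])  # answer(retrieve_data, translate)
print(f"{elapsed:.2f}s")  # roughly 0.4s here, versus roughly 0.6s if all three ran serially
```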

Using Claude's New Features for Agentic AI

Anthropic’s recent update to Claude introduces a feature that showcases the growing potential of agentic AI: “computer use.” Still in public beta, this feature allows Claude to operate like a virtual assistant that can navigate a computer screen, click buttons, and fill out forms, simulating how a person would interact with software. It’s a powerful step forward, enabling more autonomous and adaptable multi-agent workflows.

In essence, the new “computer use” capability could allow Claude to operate like a true digital assistant, with the ability to interact with everyday software and web applications, rather than being limited to text-only interactions. This is particularly valuable in multi-agent systems, where Claude can function as a specialized agent for web-based workflows, interacting with software to carry out tasks that extend beyond what traditional LLMs could manage on their own.
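Whatever the model, a computer-use agent runs a loop. The model proposes an action (click, type, take a screenshot), a harness you control executes it, and the result goes back to the model. The sketch below simulates that loop offline, with a hypothetical `FakeScreen` and a `scripted_model` standing in for Claude. The action dictionaries are only loosely modeled on Anthropic’s computer-use tool, so check Anthropic’s documentation for the real schema.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical screen layout: pixel coordinates of two form fields.
LAYOUT = {(100, 200): "name", (100, 260): "email"}


@dataclass
class FakeScreen:
    """Stand-in for a real desktop; a real harness would drive a VM or browser."""
    fields: dict = field(default_factory=dict)
    focused: Optional[str] = None

    def execute(self, action: dict) -> str:
        kind = action["action"]
        if kind == "left_click":
            self.focused = LAYOUT.get(tuple(action["coordinate"]))
            return f"clicked {self.focused}"
        if kind == "type":
            if self.focused is None:
                return "error: nothing focused"
            self.fields[self.focused] = action["text"]
            return f"typed into {self.focused}"
        if kind == "screenshot":
            return f"screen state: {self.fields}"  # a real harness returns an image
        return f"error: unsupported action {kind}"


def scripted_model(observations: list[str]) -> Optional[dict]:
    """Stand-in for the model: proposes the next action, or None when done."""
    plan = [
        {"action": "left_click", "coordinate": [100, 200]},
        {"action": "type", "text": "Ada Lovelace"},
        {"action": "left_click", "coordinate": [100, 260]},
        {"action": "type", "text": "ada@example.com"},
        {"action": "screenshot"},
    ]
    return plan[len(observations)] if len(observations) < len(plan) else None


def agent_loop(screen: FakeScreen, max_steps: int = 10) -> list[str]:
    observations: list[str] = []
    for _ in range(max_steps):
        action = scripted_model(observations)
        if action is None:
            break
        observations.append(screen.execute(action))  # result is fed back to the model
    return observations


screen = FakeScreen()
obs = agent_loop(screen)
print(screen.fields)  # {'name': 'Ada Lovelace', 'email': 'ada@example.com'}
```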

Getting Started with Multi-Agent Systems

Creating a multi-agent setup can sound complex, but new tools are making it accessible, even for those without coding skills. Platforms like Integrail and other multi-agent system (MAS) managers now allow you to design and deploy agents with no-code interfaces.

Important Considerations for Deploying Multi-Agent Systems

Despite the power of agentic AI, there are some important factors to keep in mind:

Role Definition: Clear roles are essential to avoid overlap and ensure each agent performs its assigned task without stepping into another agent's area of expertise.

Error and Hallucination Management: When multiple agents interact, there’s a risk that one agent’s error could propagate through the entire system. Testing and fine-tuning can help mitigate this, as can choosing reliable models for critical tasks. Templates (like MetaGPT’s SOPs) and strictly structured outputs also help limit hallucination scaling, where errors compound as they pass from agent to agent.

Human Oversight: For high-stakes applications, having a “human-in-the-loop” to validate outputs is important, especially for decisions that require nuanced judgment or creativity.

Integration Testing: Since multi-agent systems often interact with external tools, thorough testing of these integrations ensures that your agents operate smoothly and without unexpected issues.
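Several of these considerations can be enforced in code between agents. The sketch below validates an agent’s JSON output against a strict contract before handing it on, and asks a human reviewer before high-stakes actions. All names (`REQUIRED_FIELDS`, `HIGH_STAKES_ACTIONS`, `guarded_handoff`, `always_reject`) are hypothetical. The simulated reviewer also shows how you might test this integration without a real review UI.

```python
import json
from typing import Callable

# Hypothetical output contract for one agent: required keys and accepted types.
REQUIRED_FIELDS = {"action": str, "amount": (int, float), "reason": str}
# Illustrative set of actions that must never run without a human's approval.
HIGH_STAKES_ACTIONS = {"refund", "delete_account"}


def validate(raw_output: str) -> dict:
    """Reject malformed output instead of passing it on to the next agent."""
    data = json.loads(raw_output)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"field {key!r} is missing or has the wrong type")
    return data


def guarded_handoff(raw_output: str, approve: Callable[[dict], bool]) -> dict:
    """Validate an agent's output, then require human approval for risky actions."""
    data = validate(raw_output)
    if data["action"] in HIGH_STAKES_ACTIONS and not approve(data):
        raise PermissionError(f"human reviewer rejected {data['action']!r}")
    return data


def always_reject(data: dict) -> bool:
    return False  # stand-in for a real review step (UI prompt, ticket, etc.)


# Low-stakes action: validated and passed on without asking the reviewer.
summary = guarded_handoff('{"action": "summarize", "amount": 0, "reason": "weekly report"}', always_reject)

# High-stakes action: blocked because the (simulated) reviewer says no.
try:
    guarded_handoff('{"action": "refund", "amount": 50, "reason": "damaged item"}', always_reject)
except PermissionError as err:
    print(err)  # human reviewer rejected 'refund'
```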

In short, an agent is really just an LLM (or another model) with integrated tools, access to additional data, and its own system prompt. What makes them powerful is that agents can work in parallel and do much more than a single LLM ever could. It is similar to connecting OpenAI’s o1 model to tool APIs so that it can reason and act autonomously (as in ReACT). The main danger is hallucination scaling: every agent is susceptible to hallucinating, and those errors can pile up as they move through the system. So experiment heavily, and restrict the LLMs to what you know is true.
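A quick back-of-the-envelope calculation shows why hallucination scaling matters. Assume, for illustration only, that each agent in a serial chain is correct 95% of the time and that their errors are independent. The chance that the whole chain is correct is then 0.95 raised to the number of agents:

```python
def chain_reliability(per_agent_accuracy: float, num_agents: int) -> float:
    """Probability that every agent in a serial chain is correct.

    Illustrative assumption: agents fail independently of one another.
    """
    return per_agent_accuracy ** num_agents


# Illustrative assumption: 95% per-agent accuracy.
for n in (1, 3, 5, 10):
    print(n, round(chain_reliability(0.95, n), 3))
# 1 0.95
# 3 0.857
# 5 0.774
# 10 0.599
```

Under those assumptions, a five-agent chain is fully correct only about 77% of the time. Real errors are rarely independent, and a reviewing agent or a human can catch some of them, so treat this as intuition rather than a measurement.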
