Good morning everyone!

We’ve decided to shift the focus of the newsletter a bit. From now on, we will cover practical, real-world problems and questions and explain how we solved (or would solve) them.

The question we get most often from clients concerns the whole model-training dilemma: Should you use your own model? Should you train it? Should you fine-tune it? Should you host it?

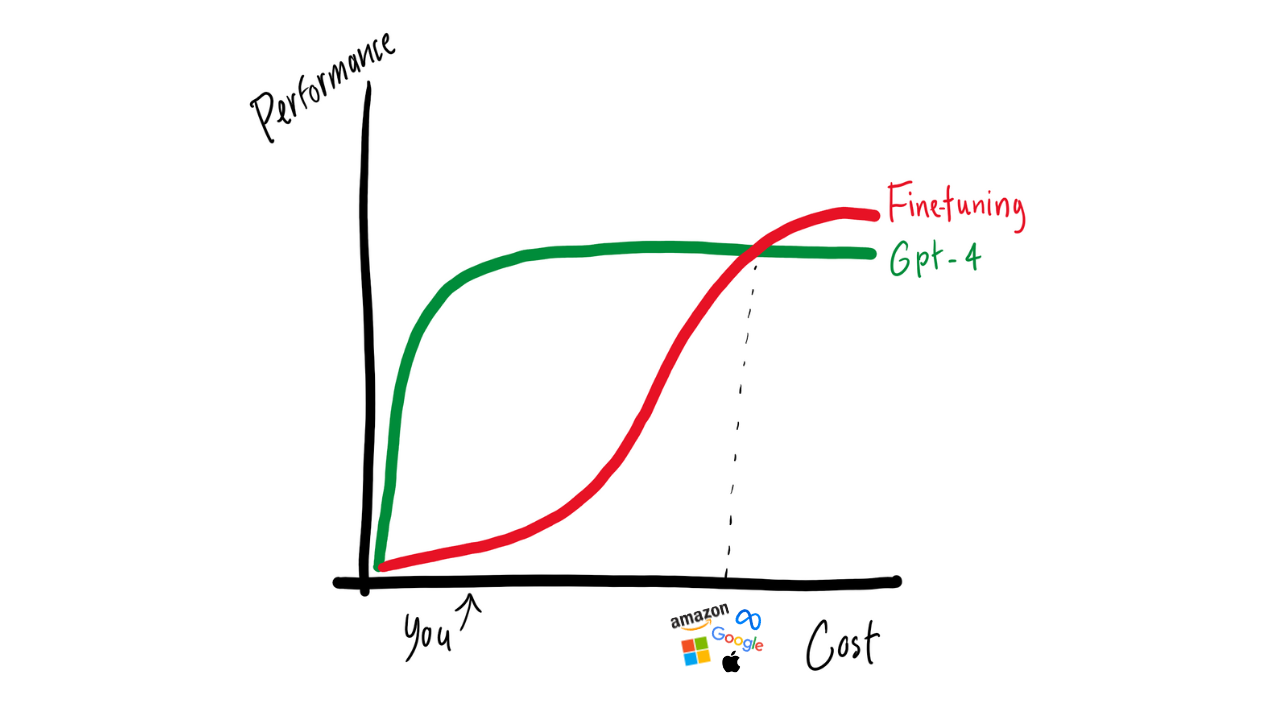

Many clients come to us wanting to fine-tune an open-source LLM, such as Llama 3 8B, expecting it to match GPT-4’s performance on their specific tasks. While fine-tuning can deliver good performance, we usually don't recommend starting with it for an MVP or first version. Fine-tuning is not trivial and requires a significant investment of time and money.

Here’s why:

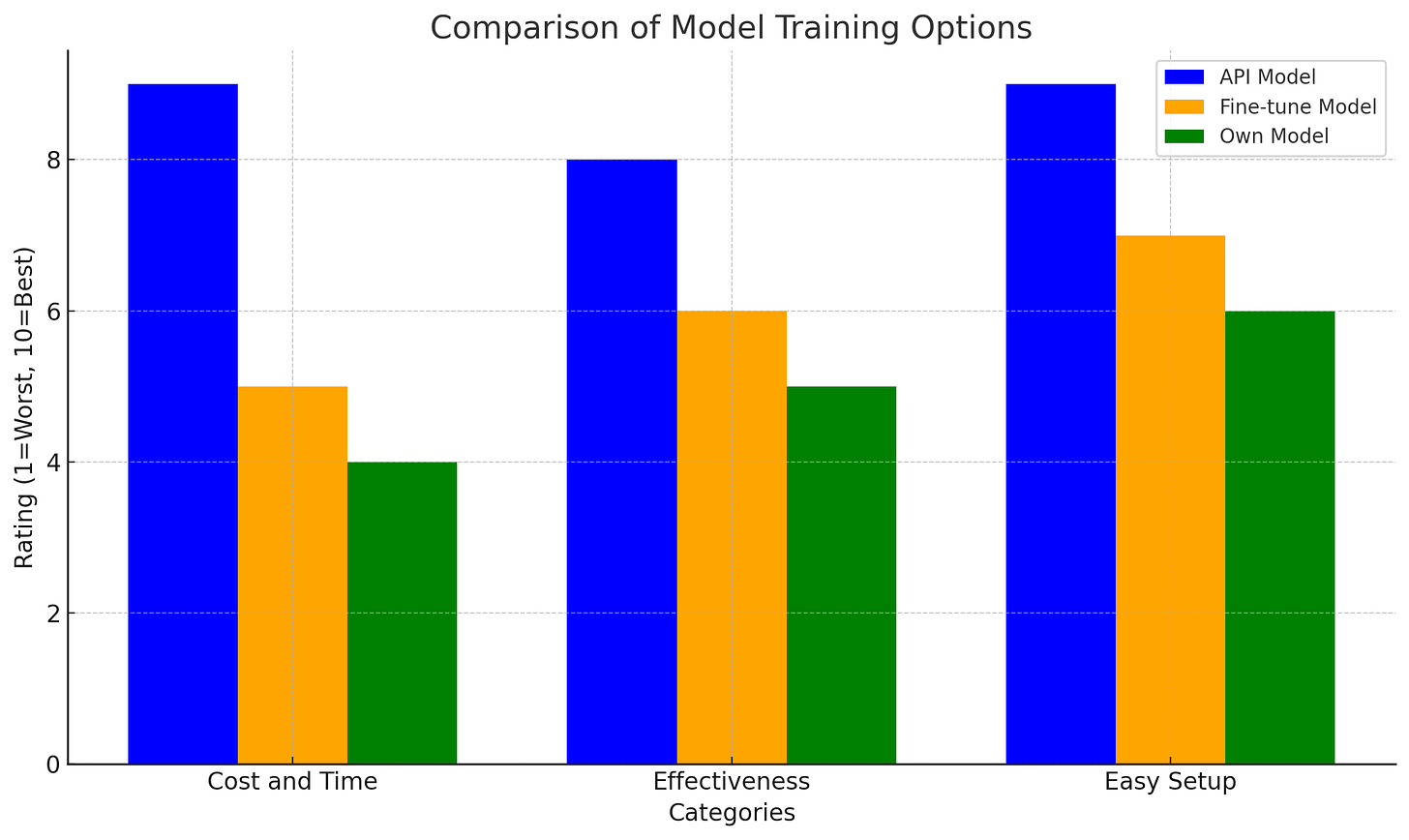

Cost and Time: Fine-tuning is expensive and time-consuming. Instead, we recommend building a low-cost prototype with OpenAI’s GPT-4o or Anthropic’s Claude Sonnet.

Effectiveness: In our experience, calling a proprietary model through an API almost always beats fine-tuning an open-source one. This is especially true if your budget is limited or if you are not yet sure an LLM can do what you want.

Easy Setup: Using LlamaIndex to combine prompting with Retrieval-Augmented Generation (RAG) is much quicker and easier than fine-tuning. Ovadia et al. found that RAG consistently outperforms unsupervised fine-tuning, both for knowledge the model encountered during training and for entirely new knowledge.

Updates: Fine-tuning is never really finished. You will have to retrain whenever your information changes and whenever a new, better base model comes out. With an API, OpenAI or Anthropic upgrades the model for you, and a RAG database is easy to update and maintain.
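To make the RAG and "easy to update" points a bit more concrete, here is a deliberately tiny sketch in plain Python. It is not the LlamaIndex API: it swaps in bag-of-words cosine similarity for real embeddings, and the `ToyKnowledgeBase` class, the `build_prompt` helper and the sample documents are all hypothetical. The prompt it builds would then be sent to whichever hosted model you use (GPT-4o, Claude Sonnet, etc.); that API call is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyKnowledgeBase:
    """Hypothetical in-memory document store standing in for a real vector index."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Adding or changing knowledge just overwrites a document;
        # no model weights are touched and nothing is retrained.
        self.docs[doc_id] = text

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        # Rank every stored document by similarity to the query, keep the top k.
        q = Counter(tokenize(query))
        ranked = sorted(
            self.docs.values(),
            key=lambda text: cosine(q, Counter(tokenize(text))),
            reverse=True,
        )
        return ranked[:k]


def build_prompt(kb: ToyKnowledgeBase, question: str, k: int = 2) -> str:
    """Put the top-k retrieved passages into the prompt for the hosted model."""
    context = "\n".join(f"- {passage}" for passage in kb.retrieve(question, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    kb = ToyKnowledgeBase()
    kb.upsert("refunds", "Refunds are processed within 14 days of the request.")
    kb.upsert("shipping", "Standard shipping takes 3 to 5 business days.")
    print(build_prompt(kb, "How long do refunds take?", k=1))

    # The refund policy changes: update one document, no retraining needed.
    kb.upsert("refunds", "Refunds are processed within 7 days of the request.")
    print(build_prompt(kb, "How long do refunds take?", k=1))
```

Note that the policy change only touches the document store; a fine-tuned model would need another training run to learn the same fact. In a real setup, the toy similarity function would be replaced by an embedding model and a vector index, which is the part a framework like LlamaIndex handles for you.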

Fine-tuning would be worth considering only if:

GPT-4 + RAG cannot meet your performance expectations on your tasks.

You already have a working MVP using paid APIs and want a cheaper/faster model.

You have someone on the team with the know-how (e.g., an ML engineer), or the budget to hire one, since fine-tuning is complex and time-consuming.

We will tackle the question of hosting a model yourself next week!