Good morning everyone!

Today, we're changing course a bit from what we announced, prompted by an interesting article one of us read. We'll discuss model hosting next week instead. In this issue, we'll explore the fascinating challenge of working with probabilistic models, such as large language models (LLMs), and how it differs from traditional software engineering.

In conventional software engineering, we write code that runs in a fully predictable environment: the result doesn't change between runs. We have full control over the logic and can anticipate the results of our programs. We also rely on unit tests to validate each part of our code, ensuring everything works as expected. Typical tests in software engineering check that the coded rules are correct, that errors are handled properly, or that response times are acceptable.

Working with probabilistic models, on the other hand, is completely different. Here, we deal with systems that produce outputs based on probabilities and on patterns learned from vast datasets. Nothing is fixed or completely deterministic. Unlike regular code, the results can vary even with the same input, introducing a layer of unpredictability. This unpredictability is both a challenge and a feature, as it allows models to generate creative and nuanced responses. You can easily see this in ChatGPT: regenerate a response, and the model will usually produce a different answer each time.

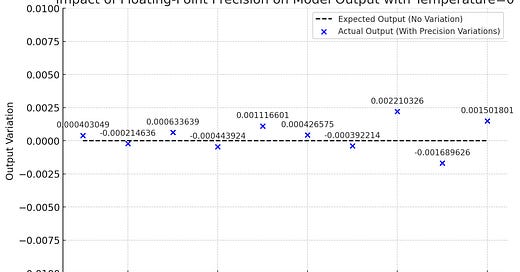
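To make the idea concrete, here is a minimal sketch of how a model might pick its next token: raw scores (logits) are turned into probabilities with a softmax scaled by a temperature, and the token is then sampled from that distribution. The tokens and scores are made up for illustration, and real LLMs sample over vocabularies of tens of thousands of tokens, but the basic mechanism is similar: one input, several possible draws.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperatures sharpen the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical next-token candidates and scores (not taken from a real model).
tokens = ["cat", "dog", "bird"]
logits = [2.0, 1.5, 0.5]
probs = softmax(logits, temperature=1.0)
print([round(p, 2) for p in probs])  # roughly [0.55, 0.33, 0.12]

# Same input, ten independent draws: the chosen token can differ between runs.
samples = [random.Random(seed).choices(tokens, weights=probs, k=1)[0] for seed in range(10)]
print(samples)

# A lower temperature concentrates almost all probability on the top token.
print(softmax(logits, temperature=0.2))
```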

You may say that you can just set the temperature to zero. However, even then, outputs can vary: differences in server hosting setups and the limited floating-point precision of neural network computations introduce subtle numerical variations that can accumulate. These tiny discrepancies can lead the model down slightly different paths during text generation, producing different outputs despite the supposedly deterministic setting, something we don't have to watch for in “regular” software.

For example, in the image below, we tried to generate 0.00000, but because floating-point numbers have limited precision, the 10 runs produced values scattered around the 0 line rather than exactly 0.

Of course, this is an exaggerated example, but it shows how tiny variations like these, repeated over thousands of computations, can lead to different predictions.
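The sketch below, in plain Python, illustrates both points. It shows that floating-point addition depends on the order of operations, and that under greedy decoding (which is what a temperature of zero usually amounts to) a nudge of about 1e-8 is enough to change which token wins when two candidates are nearly tied. The token names and scores are hypothetical; real discrepancies come from things like GPU kernels and batching, which this toy does not model.

```python
import random

# 1) Floating-point addition is not associative: grouping changes the rounding.
a = (0.1 + 0.2) + 0.3
b = 0.1 + (0.2 + 0.3)
print(a, b, a == b)  # 0.6000000000000001 0.6 False

# 2) The same effect at scale: summing the same numbers in another order,
#    as parallel hardware may do, can shift the total by a tiny amount.
rng = random.Random(0)
values = [rng.uniform(-1, 1) for _ in range(100_000)]
drift = sum(values) - sum(reversed(values))
print(f"order-dependent drift: {drift:.2e}")

# 3) Why it matters at temperature 0: greedy decoding always takes the
#    top-scoring token, so a near-tie can flip on a tiny perturbation.
def greedy_pick(scores: dict) -> str:
    return max(scores, key=scores.get)

logits = {"Paris": 3.000000001, "France": 3.0}          # hypothetical near-tied scores
nudged = {"Paris": 3.000000001, "France": 3.0 + 1e-8}   # same scores, tiny numerical drift
print(greedy_pick(logits), greedy_pick(nudged))  # Paris France
```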

Key Differences:

Unit Tests vs. Model Evaluation:

Unit Tests: In regular software, unit tests check specific functions or methods, ensuring they behave as intended. For example, a unit test for a function that adds two numbers would ensure it returns the correct sum every time.

Model Evaluation: With LLMs, we rely on different benchmarks, such as BLEU scores for translation or ROUGE scores for summarization (both essentially compare two sequences of words and score how close they are), or human evaluations for more subjective tasks. These benchmarks assess the model's performance across a range of tasks, often on large datasets, to gauge its ability to generalize. They tell us how good the model is at certain kinds of tasks, but not whether it behaves well (or as intended) in every case.
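As a rough illustration of what comparing two sequences of words means, here is a simplified ROUGE-1 computed by hand: it counts the words shared by a reference and a generated text and reports precision, recall, and F1. It is a sketch under simplifying assumptions (plain whitespace tokenization, no stemming or punctuation handling), not the official implementation.

```python
from collections import Counter

def rouge1(reference: str, candidate: str) -> dict:
    """Simplified ROUGE-1: unigram overlap between a reference and a generated text."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    # Count each shared word at most as many times as it appears in both texts.
    overlap = sum((Counter(ref_tokens) & Counter(cand_tokens)).values())
    recall = overlap / len(ref_tokens) if ref_tokens else 0.0
    precision = overlap / len(cand_tokens) if cand_tokens else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

# 5 of the 6 words match, so precision, recall, and F1 are all about 0.83.
print(rouge1("the cat sat on the mat", "the cat lay on the mat"))
```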

Deterministic vs. Probabilistic Outputs:

Deterministic Code: In traditional software, the same input will always produce the same output, making debugging and testing straightforward.

Probabilistic Models: LLMs generate outputs based on learned probabilities, which means the same input can yield different results. Since it is impossible to test every case, teams often rely on metrics tied to business outcomes to evaluate the model’s performance.
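One way to translate this into tests is to stop asserting on a single output and instead run the same input many times, gating on an agreed pass rate. In this sketch, `fake_llm_classify` is a hypothetical stand-in that returns the right label about 90% of the time; in practice it would wrap a real model call, and both the 200 trials and the 0.8 threshold are arbitrary choices to agree on with your team.

```python
import random

def fake_llm_classify(text: str, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM call: ignores the text, right about 90% of the time."""
    return "positive" if rng.random() < 0.9 else "negative"

def pass_rate(fn, text: str, expected: str, trials: int = 200, seed: int = 0) -> float:
    """Run the same input many times and measure how often the output is acceptable."""
    rng = random.Random(seed)
    hits = sum(fn(text, rng) == expected for _ in range(trials))
    return hits / trials

rate = pass_rate(fake_llm_classify, "I love this product!", "positive")
print(f"pass rate: {rate:.2%}")

# Instead of a single exact-match assertion, gate on an agreed threshold.
assert rate >= 0.8, "model fell below the agreed quality bar"
```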

Benchmarks and Metrics:

Regular Software: Benchmarks might involve response times, memory usage, or throughput, such as how quickly a web server can handle requests.
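For comparison, a regular-software benchmark is easy to script because the function's output never changes; only the timing varies. This sketch times a hypothetical `handle_request` function with `time.perf_counter` and reports percentiles using `statistics.quantiles` (available since Python 3.8).

```python
import statistics
import time

def handle_request(n: int) -> int:
    """Hypothetical stand-in for a request handler: deterministic work, same result every run."""
    return sum(i * i for i in range(n))

latencies_ms = []
for _ in range(200):
    start = time.perf_counter()
    handle_request(10_000)
    latencies_ms.append((time.perf_counter() - start) * 1000)

# quantiles(n=100) returns the 99 cut points between percentiles 1..99.
pct = statistics.quantiles(latencies_ms, n=100)
print(f"p50={pct[49]:.3f} ms  p95={pct[94]:.3f} ms  max={max(latencies_ms):.3f} ms")
```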

LLMs: Benchmarks include accuracy, precision, recall, F1 scores, and user satisfaction metrics. These benchmarks are critical for understanding how well a model performs in real-world applications, but they don't tell us everything. Some problems only surface in production, so we need to monitor the model and ship fixes regularly. Models need to be monitored even more closely than regular code.
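Precision, recall, and F1 come from counting true positives, false positives, and false negatives. A small sketch, assuming a binary task such as deciding whether a support ticket should be escalated, with hypothetical human labels (`y_true`) and model predictions (`y_pred`):

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # correctly flagged
    fp = sum(t != positive and p == positive for t, p in pairs)  # flagged by mistake
    fn = sum(t == positive and p != positive for t, p in pairs)  # missed
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: 1 = should be escalated, 0 = should not.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]
print(classification_metrics(y_true, y_pred))
```

Here every metric happens to equal 0.75 (3 true positives, 1 false positive, 1 false negative, 3 true negatives); on real data they usually diverge, which is why it helps to track them separately.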

Maintenance and Updates:

Traditional Software: Updates typically involve bug fixes or adding new features, usually requiring updates to the codebase and corresponding unit tests.

LLMs: Models require continuous retraining to incorporate new data and improve performance. This process can be resource-intensive and requires robust data pipelines and infrastructure. It's also much harder to gauge the impact of a newly trained model, since most of what we learn about its performance comes from actual users.
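When most of the evidence comes from users, comparing a retrained model with the current one often means comparing user-feedback rates. A minimal sketch, assuming an A/B test where each user either gives a thumbs-up or not, uses a standard two-proportion z-test; the counts are hypothetical.

```python
import math

def two_proportion_z_test(success_a: int, total_a: int, success_b: int, total_b: int):
    """Two-sided z-test for the difference between two success rates (pooled variance)."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value

# Hypothetical thumbs-up counts: current model (A) vs. retrained model (B).
diff, z, p = two_proportion_z_test(780, 1000, 810, 1000)
print(f"difference={diff:+.3f}, z={z:.2f}, p={p:.3f}")
```

With these numbers, the retrained model looks 3 points better, but the p-value is roughly 0.1, so the difference could plausibly be noise; more traffic would be needed before drawing a conclusion.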

Practical Examples:

Unit Test in Regular Software:

```python
def add(a, b):
    return a + b

def test_add():
    assert add(2, 3) == 5
```

This is quite straightforward.

Evaluation of an LLM: Suppose we're evaluating an LLM's ability to summarize text. We might use a dataset with known summaries and compare the model's output using ROUGE scores.

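```python
from rouge_score import rouge_scorer

# ROUGE-1 measures unigram overlap; ROUGE-L uses the longest common subsequence.
scorer = rouge_scorer.RougeScorer(['rouge1', 'rougeL'], use_stemmer=True)

# score() takes the reference (target) first, then the model output (prediction).
score = scorer.score(target="The reference summary.", prediction="The model generated summary.")

print(score)
```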

This would only give us information on one of our LLM's abilities and might not accurately represent what the users want from it.

Adapting to Probabilistic Systems:

When working with probabilistic models, embracing investigation and adaptability is essential. Here are key considerations for improving decision-making under uncertainty:

Investigation: Design tests with clear objectives and metrics to guide your decisions. If the results are not positive, define the conditions under which the idea should be reevaluated.

Metrics and Outcomes: Traditional test coverage doesn't apply here. Focus on outcomes and metrics that drive decision-making. For instance, improving precision might enhance user experience.

Documentation and Decision Making: Share testing results with your team. This collective learning helps refine models and approaches.

Focus on Business Metrics: Ensure investigations align with business outcomes.

Structured Outputs: Use libraries like Outlines or Instructor to obtain data structures rather than strings from LLMs. This approach simplifies data handling and analysis by providing structured outputs directly from the models.
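Outlines and Instructor have their own APIs, which this sketch does not reproduce. It only illustrates the underlying idea with the standard library: ask the model for JSON, then parse it into a typed structure and reject anything that doesn't match the expected schema. The `TicketTriage` schema, the allowed categories, and the reply string are all hypothetical.

```python
import json
from dataclasses import dataclass

@dataclass
class TicketTriage:
    """Hypothetical schema we want the model to fill in."""
    category: str
    priority: int

ALLOWED_CATEGORIES = {"billing", "bug", "feature_request"}

def parse_triage(raw: str) -> TicketTriage:
    """Parse a model's JSON reply and validate it; raise instead of passing bad data along."""
    data = json.loads(raw)
    triage = TicketTriage(category=str(data["category"]), priority=int(data["priority"]))
    if triage.category not in ALLOWED_CATEGORIES:
        raise ValueError(f"unexpected category: {triage.category!r}")
    if not 1 <= triage.priority <= 5:
        raise ValueError(f"priority out of range: {triage.priority}")
    return triage

# Hypothetical raw model reply.
reply = '{"category": "bug", "priority": 2}'
print(parse_triage(reply))  # TicketTriage(category='bug', priority=2)
```

Downstream code can then work with `triage.category` and `triage.priority` directly instead of searching free text.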

In conclusion, while traditional software engineering offers more control and predictability, working with probabilistic models requires embracing uncertainty and relying on sophisticated statistical evaluation metrics to ensure good performance.

As mentioned in the previous issue, next week we'll discuss hosting a model yourself and weigh the pros and cons of this approach. We'll also cover using structured outputs for LLMs soon. Stay tuned!